Architecture and specifications

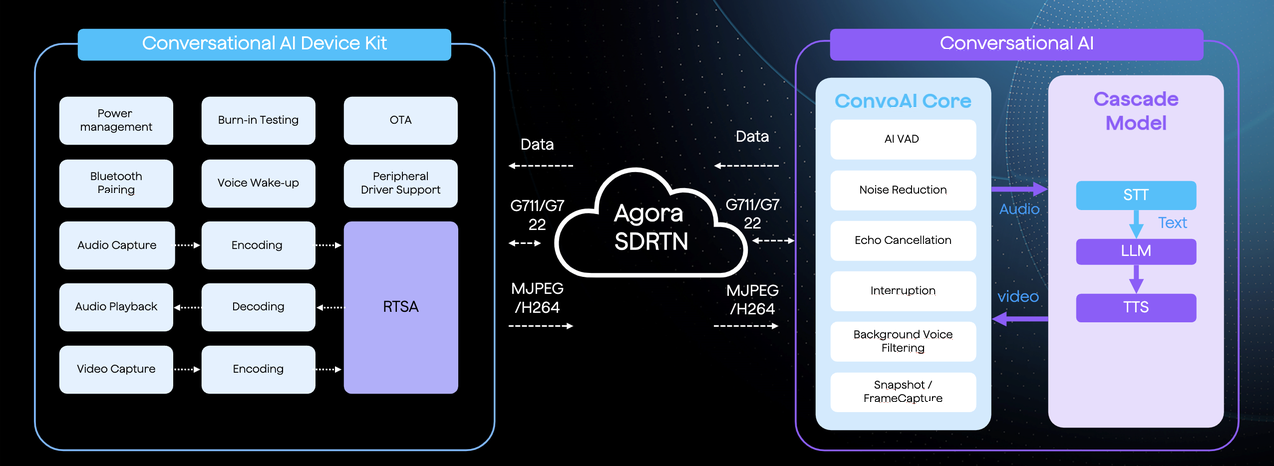

The Convo AI Device Kit solution is built on two Agora products: the Media Stream Acceleration (RTSA) or IoT SDK, and the Conversational AI Engine. RTSA is Agora's RTC client product designed specifically for the IoT industry, and it provides the real-time communication foundation for voice AI interactions.

Solution architecture

The Convo AI Device Kit solution consists of two main components connected through Agora's Software-Defined Real-Time Network (SDRTN®).

- Convo AI Device Kit

  The hardware device handles local processing, including power management, Bluetooth pairing, voice wake-up, OTA updates, and peripheral driver support. It captures audio and video through dedicated modules, encodes the data, and transmits it through RTSA to Agora's SDRTN®. The device also receives and decodes audio and video responses for playback.

- Conversational AI Engine

  The cloud service processes the incoming audio and video streams. It has two main components:

  - Convo AI Core: Handles AI-powered voice activity detection (VAD), noise reduction, echo cancellation, interruption detection, background voice filtering, and snapshot/frame capture

  - Cascade Model: Processes the audio through Speech-to-Text (STT), sends the text to a Large Language Model (LLM), and converts the response back to speech using Text-to-Speech (TTS)
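The STT, LLM, and TTS stages of the Cascade Model can be pictured as three functions chained in order. The following is a minimal sketch under stated assumptions, not the Conversational AI Engine API: `CascadePipeline` and the `fake_*` stages are hypothetical names, and the stages are placeholders that move text around so the example runs without any cloud service.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class CascadePipeline:
    """Chains three stages: speech-to-text, language model, text-to-speech."""
    stt: Callable[[bytes], str]   # audio in, transcript out
    llm: Callable[[str], str]     # transcript in, reply text out
    tts: Callable[[str], bytes]   # reply text in, audio out

    def respond(self, audio_in: bytes) -> bytes:
        text = self.stt(audio_in)   # 1. transcribe the user's speech
        reply = self.llm(text)      # 2. generate a text reply
        return self.tts(reply)      # 3. synthesize the reply as audio


# Placeholder stages (hypothetical): real stages would call STT, LLM,
# and TTS services instead of encoding and decoding plain text.
def fake_stt(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_llm(prompt: str) -> str:
    return f"You said: {prompt}"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


pipeline = CascadePipeline(stt=fake_stt, llm=fake_llm, tts=fake_tts)
print(pipeline.respond(b"hello"))
```

Because each stage is passed in as a plain callable, one stage can be swapped out without touching the other two, which is the main appeal of a cascade design.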

The system uses G.711/G.722 audio codecs and MJPEG/H.264 video codecs for efficient real-time transmission between the device and cloud services, enabling low-latency conversational AI interactions.
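To give a sense of the audio payload these codecs produce, the sketch below computes the encoded size of one audio frame. G.711 encodes 8 kHz audio at 8 bits per sample (64 kbit/s), and G.722 is shown in its 64 kbit/s mode. The 20 ms frame duration is an assumption chosen for illustration; the source does not state the frame size the kit uses.

```python
def payload_bytes(bitrate_bps: int, frame_ms: int) -> int:
    """Encoded payload size of one audio frame, excluding packet headers."""
    # bits per second * milliseconds / 1000 ms per second / 8 bits per byte
    return bitrate_bps * frame_ms // 8000


G711_BPS = 8000 * 8   # 8 kHz sampling, 8 bits per sample = 64 kbit/s
G722_BPS = 64_000     # G.722 in its 64 kbit/s mode (16 kHz sampling)

FRAME_MS = 20         # assumed frame duration, not taken from the source

print(payload_bytes(G711_BPS, FRAME_MS))  # 160
print(payload_bytes(G722_BPS, FRAME_MS))  # 160
```

At the same bitrate, G.722 carries wideband (16 kHz) audio in the same number of bytes that G.711 uses for narrowband audio.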

Hardware architecture

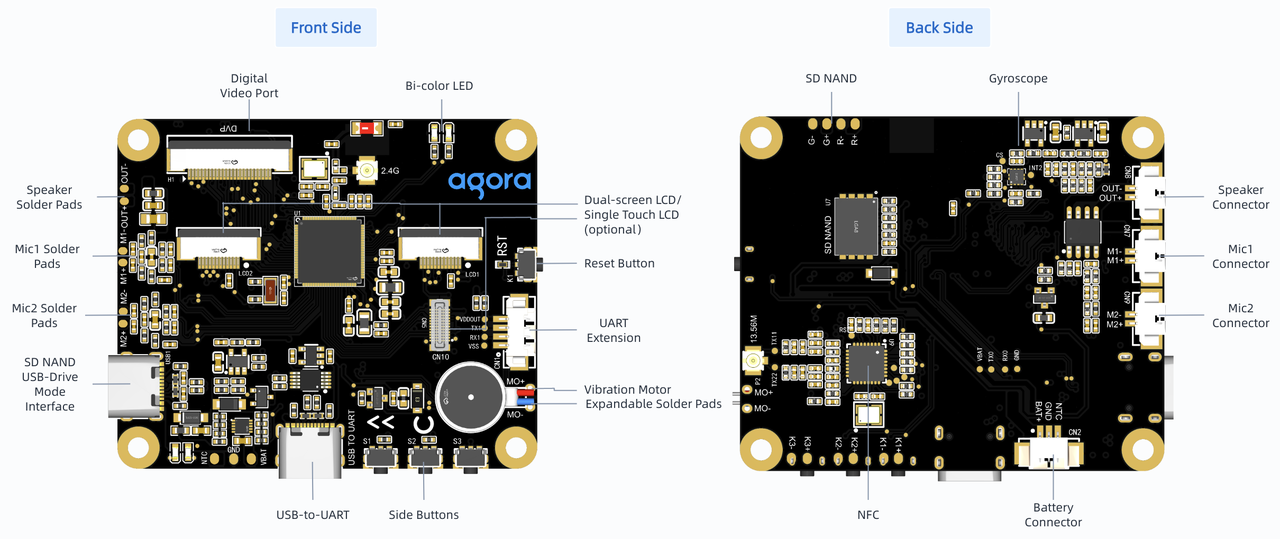

The R1 Kit integrates multiple hardware components to enable multimodal AI interactions. Key components:

- Dual-screen LCD/Single touch LCD (optional)

- Digital video port

- Bi-color LED indicator

- Dual-microphone array with solder pads and connectors

- Speaker with solder pads and connector

- Gyroscope for motion sensing

- SD NAND storage

- Battery connector for portable power

- NFC support

- USB-to-UART interface

- Reset button and side buttons

- Vibration motor (expandable)

Software architecture

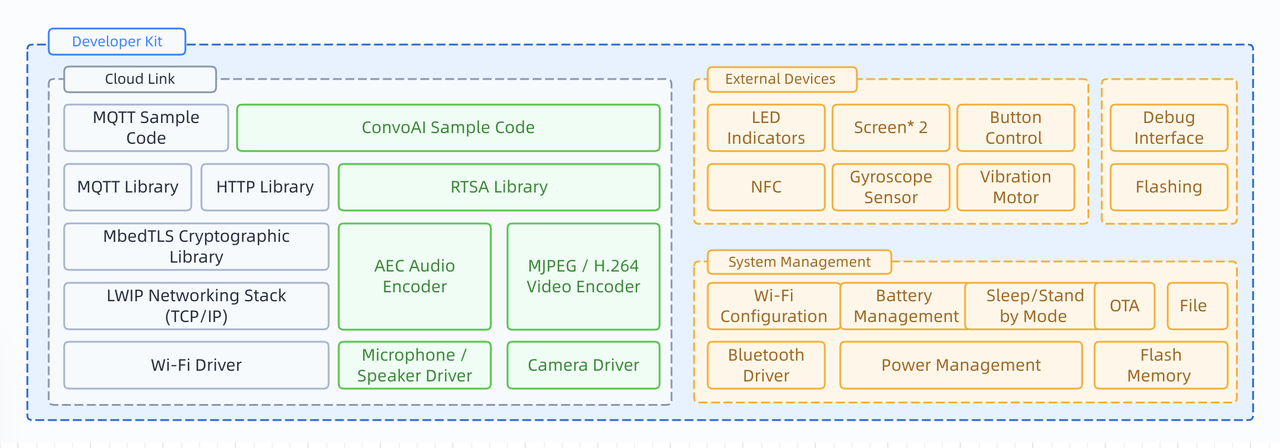

The software package provides a complete development framework for building conversational AI applications.

Performance specifications

The Convo AI Device Kit delivers ultra-low latency performance with robust audio processing capabilities across a global network.

- Latency

  - Conversation latency: As low as 650 ms

  - Interruption response: As low as 340 ms

  - Global network end-to-end latency: Median as low as 76 ms

- Audio processing

  - Noise suppression: Filters 95% of environmental noise

  - Packet loss resistance: Up to 80% packet loss tolerance

- Coverage

  - Network coverage: 200+ countries and regions

  - Language support: 35+ languages

Hardware specifications

The R1 Kit is based on the RiseLink BK7258 chipset and includes open-source hardware and software resources.

- Audio capabilities

  - Dual-microphone array with local AEC (Acoustic Echo Cancellation) algorithm

  - Precise audio capture with echo interference elimination

- Visual and sensor capabilities

  - Integrated camera for visual recognition

  - Gyroscope for motion sensing and gesture control

- Power management

  - Battery power support

  - Deep sleep mode for mobile scenarios

- Connectivity

  - Bluetooth provisioning

  - Wi-Fi 6 support for one-click cloud service connection

- Display

  - Dual-screen collaborative display

- Interaction modes

  - Multi-channel input: voice, touchscreen, and gyroscope (gesture/tilt control)

  - Custom wake word support

  - Real-time continuous conversation

  - LLM real-time visual reasoning

Platform compatibility

The Convo AI Device Kit supports various mainstream communication standards and chipsets.

- Supported chip manufacturers

  - RiseLink

  - Espressif

  - Unisoc

  - Ingenic

  - Rockchip (RK)

  - Sigmastar

- Communication standards

  - Wi-Fi

  - LTE Category 1

- Additional support

  - Image Signal Processor (ISP) chips

For specific supported chip models and compatibility details, contact technical support.

Advanced audio algorithms

The Convo AI Device Kit employs specialized algorithms to ensure accurate voice recognition and natural conversation flow in challenging environments.

AI noise reduction

Filters 95% of environmental noise, which enables accurate recognition and prevents interaction errors in noisy environments such as coffee shops and train stations.

BHVS and voiceprint algorithms

- Background Human Voice Separation (BHVS): Filters background voices in multi-person conversation scenarios

- Voiceprint recognition: Locks onto the primary speaker in multi-person conversations
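One common way to lock onto a speaker is to compare a voice embedding of each audio segment against an enrolled reference and keep only segments that are similar enough. The sketch below illustrates that general idea with cosine similarity. It is not Agora's BHVS or voiceprint implementation: the function names, the two-dimensional vectors, and the 0.8 threshold are all hypothetical, and real embeddings would come from a speaker-recognition model.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def is_primary_speaker(enrolled: Sequence[float],
                       segment: Sequence[float],
                       threshold: float = 0.8) -> bool:
    """True when the segment's embedding is close to the enrolled voiceprint."""
    return cosine_similarity(enrolled, segment) >= threshold


enrolled = [1.0, 0.0]   # hypothetical enrolled voiceprint
print(is_primary_speaker(enrolled, [0.9, 0.1]))  # similar direction
print(is_primary_speaker(enrolled, [0.0, 1.0]))  # orthogonal direction
```

Segments that fail the check would be treated as background voices and excluded from recognition.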

Graceful interruption algorithm

Enables the AI to detect user interruption intent in real time and to determine precisely when to speak and when to stop, restoring a natural conversation rhythm.
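The basic shape of interruption handling can be sketched as a small state machine that watches per-frame voice activity decisions while the agent is speaking. This is a deliberately simple illustration, not the graceful interruption algorithm itself, which also infers intent. `BargeInDetector` and its `min_frames` debounce threshold are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class BargeInDetector:
    """Stops agent playback after `min_frames` consecutive user-speech frames."""
    min_frames: int = 3          # hypothetical debounce threshold
    agent_speaking: bool = False
    _run: int = 0                # current streak of user-speech frames

    def on_frame(self, user_speech: bool) -> bool:
        """Feed one VAD decision; return True when playback should stop."""
        if not self.agent_speaking:
            self._run = 0
            return False
        # Extend the streak on speech, reset it on silence.
        self._run = self._run + 1 if user_speech else 0
        if self._run >= self.min_frames:
            self.agent_speaking = False   # yield the turn to the user
            self._run = 0
            return True
        return False


detector = BargeInDetector(min_frames=3, agent_speaking=True)
vad_frames = [True, False, True, True, True, True]
decisions = [detector.on_frame(v) for v in vad_frames]
print(decisions)  # [False, False, False, False, True, False]
```

Requiring several consecutive speech frames keeps a cough or a short backchannel from cutting the agent off, at the cost of a slightly slower response to a real interruption.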

Weak network resistance

Maintains uninterrupted voice interaction in poor network conditions such as subways and basements, tolerating up to 80% packet loss.
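To show how severe 80% packet loss is, the sketch below uses a generic model in which each packet is lost independently and every audio frame is sent in several redundant copies. This is an assumption made for illustration only. The source does not describe how the kit achieves its loss resistance, and real systems typically combine techniques such as forward error correction, retransmission, and loss concealment.

```python
import math


def delivery_probability(loss_rate: float, copies: int) -> float:
    """Probability that at least one of `copies` independent packets arrives."""
    return 1.0 - loss_rate ** copies


def copies_needed(loss_rate: float, target: float) -> int:
    """Smallest number of copies whose delivery probability reaches `target`.

    Requires 0 < loss_rate < 1 and 0 < target < 1.
    """
    return math.ceil(math.log(1.0 - target) / math.log(loss_rate))


print(round(delivery_probability(0.8, 1), 4))   # 0.2
print(round(delivery_probability(0.8, 10), 4))  # 0.8926
print(copies_needed(0.8, 0.99))                 # 21
```

Under this naive model, reaching 99% frame delivery at 80% loss takes 21 copies of every frame, which is why practical systems rely on more efficient recovery schemes than plain duplication.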